I’d like to say that the writing that had the most profound effect on me this year was some classic novel I picked up in my spare time, but in fact it was an Associated Press article. Last June, AP Medical Writer Mike Stobbe wrote a fascinating, harrowing story about large holes dug in beach sand that can collapse “horrifyingly fast” and cause a person in the hole to drown. Stobbe described one case in which a teenager ran back to catch a football, fell into a hole and disappeared under a cascade of sand. When his friends approached to help, more sand caved in over him. Buried for fifteen minutes, he suffocated. Stobbe disclosed in the article that, while they’re virtually unheard of, collapsing sand holes are actually more common than “splashier threats” like shark attacks.

I read the story right before going on vacation with my family. To the beach. Sometimes, while digging my toes into the wet sand at water’s edge, the story trespassed on my mind. I found myself scanning the beach for holes left behind by beachgoers who didn’t know about the monster in the sable but unstable sand. I wondered why I would voluntarily provide my children shovels and pails — the very tools of their demise. I’m actually worried about the beach — the beach! — swallowing up my kids.

And that’s not all I’m worried about. After a summer of sand terror, and tracking mosquitoes with Triple-E and dead birds with West Nile Virus, I fretted to see a constant stream of headlines like…

- Brain-eating amoeba kills 6 this year

- Drugmakers recall infant cough/cold medicine

- ConAgra shuts down pot pie plant because of salmonella concerns

- Listeria precaution prompts recall of chicken & pasta dish

Then came the Great Lead Recalls of 2007, when parents learned that everything from toys to tires is laden with toxic heavy metal. Oh, and my toothpaste might have a chemical in it that’s usually found in antifreeze and brake fluid.

Also, MRSA, the so-called superbug that resists antibiotics, is “more deadly than AIDS,” and a new strain of adenovirus means that now the common cold can kill me. Also, my Christmas lights have lead in them. Finally, I found Boston.com’s page called “Tainted Food, Tainted Products,” where I could track all of the products that were potentially deadly to me, including everything from mushrooms containing illegal pesticides to lead-bearing charity bracelets. Charity bracelets!

It’s enough to make you want to hide from the world in your basement — provided of course you’ve tested it for excessive levels of radon.

In many ways, 2007 was The Year of Disclosure.

When this idea first came to me, I wasn’t thinking about the sand. I was thinking about information security, as I was writing a disheartening story about serious malware threats while also studying some of the thousands of data breach disclosure letters that were issued this year in response to the mandatory disclosure laws that 38 states have created.

But then, throughout the fall, I started to notice that risk disclosure was turning into one of those news phenomena that eventually earns its own graphic treatment and theme music on cable news. It earned landing pages on Web sites with provocative names like “Tainted Food, Tainted Products.”

Media teems with more risk disclosure than ever — an endless stream of letters about identity theft, disclaimers in drug commercials, warnings on product labels, product recalls and, of course, news stories.

It’s not just the volume of disclosure that’s wearing me down, though, but also its changing nature. Disclosure is more pre-emptive than ever. We know about risks before they’re significant. Many of the state data breach disclosure laws, for example, mandate notification even when it’s merely possible that private information was compromised.

Even more bizarre and stressful, disclosure is becoming presumptive. The cough medicine recall, for example, involved a product that a consumer advocate said was safe when used as directed. The risk that forced cough syrup off the shelves was that if you give a child too much medicine, it could lead to an overdose, which is reflexively obvious. ConAgra’s pot pie shutdown also involved a product that company officials declared posed no health risk if cooked as directed. These disclosures, and the subsequent costly recalls, are based on the idea that not following directions is dangerous.

The most insidious change is with the rare but spectacular risks, the sensational tales of brain-eaters and sand hole killers. Such stories have always existed, of course, but something is different now, and that’s the internet. Ubiquitous access combined with the bazaar of potential publishers means the freakiest event can be shared by millions of people. Anyone can read about it, blog about it, link to it, forward it in email, and post it as a video. But there’s no impetus for publishers to disclose the risk responsibly or reasonably. Their agenda may even call for them to twist the truth, to make the risk seem more or less serious than it is.

Here’s the paradox that rises out of all this: As an individual and consumer, I like disclosure. I want every corporate and civic entity I place trust in to be accountable. I want journalists and scientists to unearth the risks I’m not being told about. At the same time, while any one disclosure of a threat may be tolerable, or even desirable, the cumulative effect of so much disclosure is, frankly, freaking me out.

So I started to wonder, at what point does information become too much information? Is more disclosure better or is it just making us confused and anxious? Does it enable better decisions or just paralyze us? What do the constant reminders of the ways we’re in danger do to our physical and mental health?

To answer these questions, I sought out two leading experts on risk perception and communication: Baruch Fischoff and Paul Slovic, both former presidents of the Society for Risk Analysis. I told them that I wanted to understand risk perception and communication better, to see if they’d researched the effect of this ubiquitous access to risk information, and what we could do about the disclosure paradox.

But really I was hoping for some salve. Some way to stop worrying about sand holes at the beach.

“It’s a really difficult topic,” says Fischoff. “On the one hand you want disclosure because it affirms that someone is watching out for these things and that the system is catching risks, but on the other hand, there’s so much to disclose, it’s easy to get the sense the world is out of control.”

Little research exists on the physical health effects of any risk disclosure, never mind the cumulative effects of all of it. Certainly media saturation in general is being blamed for increased anxiety, stress and insomnia, gateways to obesity, high blood pressure, depression and other maladies.

The mental health effects of so much disclosure, though, are reasonably well understood. Research suggests that knowing about all risks all the time is not only unproductive, but possibly counterproductive.

To understand how, I was sent to look up research from the late 1960s, when some psychologists put three dogs in harnesses and shocked them. Dog A was alone and was given a lever to escape the shocks. Dogs B and C were yoked together, and Dog B had access to the lever but Dog C did not. Both Dog A and Dog B learned to press the lever and escape the shocks. Dog C escaped with Dog B but he didn’t really understand why. To Dog C the shocks were random, out of his control. Afterward, the dogs were shocked again, but this time they were alone and each was given the lever. Dog A and Dog B both escaped again, but Dog C did not.

Dog C curled up on the floor and whimpered.

After that, the researchers tested the idea with positive reinforcement, using babies in cribs. Baby A was given a pillow that controlled a mobile above him. Baby B was given no such pillow. When both babies were subsequently placed in cribs with a pillow that controlled the mobile, Baby A happily triggered it; Baby B didn’t even try to learn how.

Psychologists call this behavior “learned helplessness” — convincing ourselves that we have no control over a situation even when we do. The experiments arose from research on depression, and the concept has also been applied to understand torture. It also applies to risk perception. Think of the risks we learn about every day as little shocks. If we’re not given levers that reliably let us escape those shocks (in the form of putting the risk in perspective or giving people information or tools to offset the risk, or in the best case, a way to simply opt out of the risk), then we become Dog C. We learn, as Fischoff said, that the world is out of our control. What’s more, sociologists believe that the learned helplessness concept transfers to social action. It not only explains how individuals react to risk, but also how groups do.

My favorite learned helplessness experiment is this one: People were asked to perform a task in the presence of a loud radio. For some, the radio included a volume knob, while for others no volume knob was available. Researchers discovered that the group that could control the volume performed the task measurably better, even if they didn’t turn the volume down. That is, just the idea that they controlled the volume made them less distracted, less helpless and, in turn, more productive.

Control is the thing, both Fischoff and Slovic say. It’s the countervailing force to all of this risk disclosure and the learned helplessness it fosters.

We have many ways of creating a sense of control. One is lying to ourselves. “We’re pretty good at explaining risks away,” says Slovic. “We throw up illusory barriers in our mind. For example, I live in Oregon. Suppose there’s a disease outbreak in British Columbia. That’s close to me but I can tell myself, ‘that’s not too close’ or ‘that’s another country.’ We find ways to create control, even if it’s imagined.” And the more control, real and imagined, that we can manufacture, Slovic says, the more we downplay the chances a risk will affect us.

Conversely, when we can’t create a sense of control over a risk, we exaggerate the chances that it’ll get us. For example, in a column (near the bottom), Brookings scholar Gregg Easterbrook mentions that parents have been taking kids off of school buses and driving them to school instead. Part of this is due to the fact that buses don’t have seat belts, which seems unsafe. Also, bus accidents provoke sensational, prurient interest; they make the news far more often than car accidents, making them seem more common than they are.

Yet buses are actually the safest form of passenger transportation on the road. In fact, children are eight times less likely to die on a bus than they are in a car, according to research by the National Highway Traffic Safety Administration (NHTSA). That means parents put their kids at more risk by driving them to school rather than letting them take the bus.

Faced with those statistics, why would parents still willingly choose to drive their kids to school? Because they’re stupid? Absolutely not. It’s because they’re human. They tend to think that they themselves won’t get in a car accident; no one thinks they’re going to get into an accident, because they’re driving. They’re in control. At the same time, they dread the idea that something out of their control, a bus, could crash.

Dread is a powerful force. The problem with dread is that it leads to terrible decision making.

Slovic says all of this results from how our brains process risk, which is in two ways. The first is intuitive, emotional and experience-based. Not only do we fear more what we can’t control, but we also fear more what we have seen or can imagine. Eat a berry, get violently ill. See the berry again, remember the experience, don’t eat the berry. This seems to be an evolutionary survival mechanism. In the presence of uncertainty, fear is a valuable defense. Our brains react emotionally, generate anxiety and tell us, “Remember the news report that showed what happened when those other kids took the bus; don’t put your kids on the bus.”

The second way we process risk is analytical: we use probability and statistics to, essentially, override, or at least prioritize, our dread. That is, our brain plays devil’s advocate with its initial intuitive reaction, and tries to say, I know it seems scary but eight times as many people die in cars as they do on buses, and in fact, only one person dies on a bus for every 500 million miles buses travel. Buses are safer than cars.

Unfortunately for us, intuitive risk processors can easily overwhelm analytical ones, especially in the presence of those etched-in images, sounds and experiences. Intuition is so strong that if you presented factual risk analysis about the relative safety of buses over cars to someone who had seen a bus accident, it’s possible that they’d still choose to drive their kids to school, because their brain has washed them in dreadful images. It sends fear through them. They feel the lack of control over a bus compared to a car that they drive. A car just feels safer. “We have to work real hard in the presence of images to get the analytical part of risk response to work in our brains,” says Slovic. “It’s not easy at all.”

And we’re making it harder. Not only does all of this risk disclosure make us feel helpless, but it also gives us ever more of those images and experiences that trigger the intuitive response without analytical rigor to override the fear. Slovic points to several recent cases where reason has lost to fear: The sniper who terrorized Washington D.C.; pathogenic threats like MRSA and brain-eating amoeba. Even the widely publicized drunk-driving death of a baseball player this year led to decisions that, from a risk perspective, were irrational.

The most salient example of the intuitive brain fostering bad decision making is terrorism, which produces the most existential dread of all. It can be argued that decisions following 9/11 were poor, emotional and failed to logically address the risks at hand. What’s more, those decisions took necessary but limited resources away from other risks more likely to affect us than terrorism. Like hurricanes.

Or even sunburns. If you ask your friends which they’re more afraid of, getting five sunburns or getting attacked by terrorists, many, or most, will say terrorism.

That’s intuitive. Terrorism is, well, terrifying. But it’s also exceedingly rare. In an excellent paper, University of Wisconsin Professor Emeritus Michael Rothschild dares to conjure the awful to make an important point. He shows that if terrorists hijacked and destroyed one plane every week, and you also took one plane trip every month in that same time, your odds of being killed in those terrorist attacks are just one in 135,000. Minuscule.

Even if that implausible scenario played out, if you’ve had five sunburns in your life you would still be about 900 times more likely to get skin cancer next year (one in 150), and 4.5 times more likely to die from skin cancer next year (one in 30,000).
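For anyone who wants to check those multiples, here is a minimal Python sketch. It assumes the reading that matches the quoted multiples: one in 150 is the chance of getting skin cancer next year and one in 30,000 is the chance of dying from it, both compared with Rothschild's one in 135,000. The constant and function names are made up for illustration.

```python
from fractions import Fraction

# Odds as quoted in the article, written as "one in N".
TERROR_DEATH = Fraction(1, 135_000)      # Rothschild's hypothetical weekly-hijacking scenario
SKIN_CANCER_CASE = Fraction(1, 150)      # assumed: getting skin cancer next year after five sunburns
SKIN_CANCER_DEATH = Fraction(1, 30_000)  # assumed: dying of skin cancer next year


def times_more_likely(risk, baseline):
    """Return how many times larger `risk` is than `baseline`."""
    return float(risk / baseline)


print(times_more_likely(SKIN_CANCER_CASE, TERROR_DEATH))   # 900.0
print(times_more_likely(SKIN_CANCER_DEATH, TERROR_DEATH))  # 4.5
```

Exact fractions are used so the ratios come out without floating-point rounding along the way.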

But that doesn’t matter. For sunburns, we have all kinds of ways to exert control, to make ourselves feel less helpless: hats, perceived favorable genetic histories, SPF 50, and self-delusion (“My sunburn isn’t as bad as that guy’s”). We have volume knobs for that radio.

For terrorism, we have no volume knobs. So we create them: We build massive bureaucracies and take our shoes off at the airport.

Another, better way to create those controls is to kindle that analytical part of our brains. Provide as much factual context about the risk we’re disclosing as possible, just as Rothschild did. The more data we can take the time to analyze, the more we can override our dread with a deeper understanding of reality.

Unfortunately, ubiquitous publishing on the internet and other broadcast channels makes risks appear both more random and more likely than they really are. Without context, we are anxious. We lack control. We curl up and whimper.

The lack of control over who discloses what risks and how they do it has actually fostered less context and fewer useful statistics, not more. The news is a particularly poor vector for an analytical approach to risk processing, because analytical risk processing requires time and space to explore. And it involves statistics, a discipline most of us are poorly equipped to understand.

It also sometimes runs counter to the publisher’s agenda. “Brain-eating amoeba kills 6” gets me to click. “Rare amoeba with infinitesimal 50 million-to-one chance of affecting you”? Not so much.

Fischoff believes another factor at play is the myth of innumeracy. “There’s an idea that people can’t handle numbers, but actually there’s no evidence to support that,” he says. “I believe there’s a market for providing better context with risks.”

Slovic is less certain. He says numeracy varies widely, both because of education and predisposition. “Some people look at fractions and proportions and simply don’t draw meaning from them,” he says. To that end, Slovic thinks we need to create images and experiences that help us emotionally understand the risk analysis, that make us feel just how rare certain risks are. Make the analytical seem intuitive.

It’s difficult to do this well. For example, Slovic remembers once when he was trying to explain chemical risks that occurred in the parts-per-billion range. People can’t relate to what a part-per-billion means, “so we said one part per billion is like one crouton in a thousand-ton salad,” he says. He thought people would get that. It backfired. Rather than downplaying the risk, people exaggerated it. Why? “Because I can imagine a crouton,” says Slovic. “I can hold it in my hand, and eat it. I can’t really picture what a thousand ton salad looks like. So I focus on the risk, the crouton, because I can’t compare it to a salad that I can’t even imagine.”

Another common mistake when trying to put risk in context is to focus on multipliers instead of base rates, says Fischoff. For example, deaths by brain-eating amoeba have tripled in the past year. Yikes! That’s scary, and good for the evening news. But the base rate of brain-eating amoeba cases, even after the rate tripled, is six deaths. One in 50 million people. That’s less scary and also less interesting from the prurient newsman’s perspective.
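The gap between a multiplier and a base rate is easy to see with the numbers side by side. The sketch below is illustrative only: the population of 300 million is an assumption, chosen because it is what six deaths at "one in 50 million" implies, and the earlier count of two deaths is inferred from "tripled" rather than reported in the article.

```python
# Assumption: population implied by six deaths equalling "one in 50 million".
POPULATION = 300_000_000


def one_in(deaths, population):
    """Express a death count as 'one in N' odds and return N."""
    return population / deaths


deaths_now = 6
deaths_before = deaths_now / 3           # inferred from "tripled", not a reported figure
multiplier = deaths_now / deaths_before  # the headline-friendly number

print(f"Multiplier: {multiplier:.0f}x")
print(f"Base rate before: one in {one_in(deaths_before, POPULATION):,.0f}")
print(f"Base rate now:    one in {one_in(deaths_now, POPULATION):,.0f}")
```

The same change reads as "tripled" in one framing and as a move from one in 150 million to one in 50 million in the other.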

But even that “one in 50 million” characterization proves problematic. It sounds tiny, but still causes us to exaggerate the risk in our minds, a phenomenon called “imaging the numerator.” Slovic describes one experiment that showed the dramatic effect of imaging the numerator: Psychiatrists were given the responsibility of choosing whether or not to release a hypothetical patient with a violent history. Half the doctors were told the patient had a “20 percent chance” of being violent again. The other half were told the patient had a “one in five” chance of being violent again.

The doctors in the “one in five” group were far more likely not to release the patient. “They lined up five people in their minds and looked at one of them and saw a violent person.” They imaged the numerator. On the other hand, 20 percent is an abstract statistic that hardly seems capable of violence.

It sounds illogical but our minds treat “one in five” as riskier than “20 percent.”

Especially with parents, one of those illusory controls we use to manage so much risk disclosure is to look at our own experiences, or lack of experiences. To wit, “I had lead toys as a kid and, well, I turned out okay.” In fact this is precisely what I told myself when I scanned the beach for killer sand holes. Look, I had spent parts of every summer, years of my life, at the beach, and, well, I turned out okay. In fact, I had never witnessed, never even heard of the collapsing sand phenomenon.

But I knew after talking to Fischoff and Slovic that this was mostly self-delusion, and not nearly enough for the analytical part of my brain to override the intuitive part. I’d need more to stop worrying about the beach eating my kids. So I decided to try to put the analytical part of my risk-processing system up against the myth. I decided to come up with some rigorous data, and relatable images that would, finally, scotch my dread.

The sand story quotes a Harvard doctor who witnessed a collapsing sand event and who has been making awareness of it his personal crusade ever since (the intuitive response to risk, like the parents pulling kids off the bus). The doctor even published a letter in the New England Journal of Medicine to raise awareness of “the safety risk associated with leisure activities in open-sand environments.” In the letter, he documents 31 sand suffocation deaths and another 21 incidents in which victims lived, occurring mostly over the past 10 years. He believes these numbers are low because he based them only on news reports that he could find.

Using rough math, let’s assume he’s right that there were many more cases than reported. Let’s double his figures, guesstimating 60 deaths and another 40 cases where victims lived over the past decade. That’s 100 cases total in ten years, or about ten sand accidents per year, including six deaths and four more where the victim lived. Of course, not everyone on the planet goes to the beach, so let’s be super-conservative and say that the beachgoer population comprised only one percent of the world population in the last year, about 60 million people (in fact it’s probably higher than this). That would make six deaths by sand for every 60 million beachgoers. That means one in 10 million beachgoers is likely to die in a sand hole.
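That back-of-the-envelope estimate can be written out step by step. This is only a sketch of the rough math above: the doubled and rounded counts are the guesstimates from the text, the world population of six billion is an assumption implied by "one percent is about 60 million," and the function name is invented for illustration.

```python
# Guesstimates from the text: the doctor's documented counts (31 deaths,
# 21 survivals), roughly doubled and rounded.
EST_DEATHS_PER_DECADE = 60
EST_SURVIVALS_PER_DECADE = 40
YEARS = 10

# Assumption: a world population of six billion, implied by
# "one percent ... about 60 million people".
WORLD_POPULATION = 6_000_000_000
BEACHGOERS = WORLD_POPULATION // 100  # deliberately conservative one percent


def annual_one_in(deaths_per_year, exposed_population):
    """Return N such that the yearly risk of death is 'one in N'."""
    return exposed_population / deaths_per_year


accidents_per_year = (EST_DEATHS_PER_DECADE + EST_SURVIVALS_PER_DECADE) / YEARS
deaths_per_year = EST_DEATHS_PER_DECADE / YEARS

print(f"Sand accidents per year: {accidents_per_year:.0f}")
print(f"Deaths per year: {deaths_per_year:.0f}")
print(f"Risk: one in {annual_one_in(deaths_per_year, BEACHGOERS):,.0f} beachgoers")
```

Raising the beachgoer share above one percent only makes the odds longer, which is why the estimate is conservative.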

That seems unlikely, but how unlikely? I’m still imaging the numerator. I’m seeing that one kid die, that teenager playing football who disappeared before his friends’ eyes. I’m thinking of his mother, grieving, quoted in the news story desperately pleading that “people have no idea how dangerous this is.”

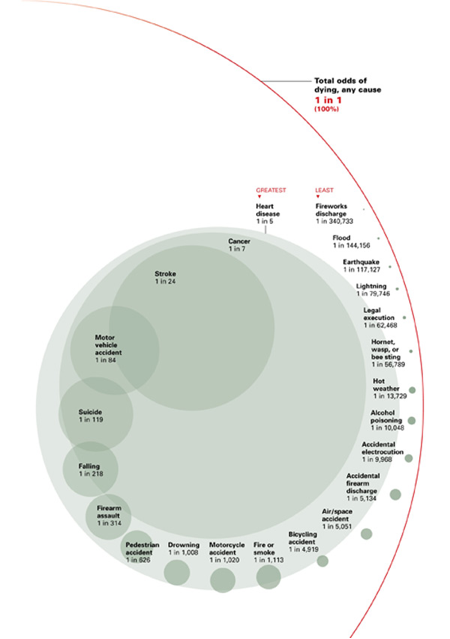

I need something better, some kind of image. I found this oddly pretty graphic, produced by the National Safety Council:

Here, the size of each circle represents the relative likelihood of dying by the cause listed inside that circle. One of every five deaths is from heart disease, so that circle is 20 percent of the overall circle (represented by the red arc), which represents chances of death by any cause, one in one. The stroke circle is about five times smaller than the heart disease one, as the risk is about one-fifth as common and just four percent of all deaths. The circles get smaller as causes of death get rarer. The smallest circle on this particular map is death from fireworks discharge, a fate suffered by one of every 350,000 people: just a few pixels on the screen.

On the above graphic, the circle for suffocation by sand would be almost 30 times smaller than the fireworks circle. Invisible, which is fitting since experts call such risks “vanishingly small.” What’s more, the sand suffocation circle would be nearly 10,000 times smaller than the circle for “drowning.” In other words, when I’m at the beach, I should be thousands of times more worried about the ocean than the sand, and even then, not too worried about either.
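Those circle comparisons are simple ratios of "one in N" odds. Here is a hedged sketch using the one-in-10-million sand estimate and the one-in-350,000 fireworks figure from the text. The drowning odds are not stated in the article, so the code only works out what "nearly 10,000 times" implies; it is not a National Safety Council number.

```python
SAND_ONE_IN = 10_000_000     # rough estimate worked out in the text
FIREWORKS_ONE_IN = 350_000   # figure quoted from the graphic


def times_smaller(rare_one_in, common_one_in):
    """How many times smaller the rarer risk is than the more common one."""
    return rare_one_in / common_one_in


sand_vs_fireworks = times_smaller(SAND_ONE_IN, FIREWORKS_ONE_IN)
print(f"Sand vs. fireworks: about {sand_vs_fireworks:.1f} times smaller")

# Working backward: if sand is roughly 10,000 times smaller than drowning,
# the drowning odds implied by that comparison are about one in this many.
implied_drowning_one_in = SAND_ONE_IN / 10_000
print(f"Implied drowning odds: one in {implied_drowning_one_in:,.0f}")
```

The first ratio comes out a little under 29, which squares with "almost 30 times smaller."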

But I’m still not quite convinced. The problem with vanishingly small risks is that they’re just that, hard to see, even in your mind. Once the circle disappears, it’s hard to understand how much smaller it’s getting.

So I need an image I can use to relate to all of the proportions. How about an Olympic-size swimming pool? If causes of death were represented by the 660,000 gallons of water in that pool, then heart disease would take up 132,000 gallons, enough to fill about 15 tanker trucks. Death by fireworks discharge would take up roughly two milk jugs of the pool’s water.

And of all that water in that big swimming pool, dying in a sand hole would take up about 17 tablespoons, less than nine ounces. About as much as a juice glass.
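The pool picture converts each "one in N" risk into a share of 660,000 gallons. The sketch below reproduces that conversion using US liquid measures (128 fluid ounces and 256 tablespoons to the gallon). The 9,000-gallon tanker capacity is an assumption for illustration; the article does not say what size truck it has in mind.

```python
POOL_GALLONS = 660_000       # pool volume used in the text
FL_OZ_PER_GALLON = 128       # US liquid measure
TBSP_PER_GALLON = 256        # 2 tablespoons per US fluid ounce
TANKER_GALLONS = 9_000       # assumed tanker capacity, not from the article


def pool_share_gallons(one_in):
    """Gallons of the pool that represent a 'one in N' risk."""
    return POOL_GALLONS / one_in


heart = pool_share_gallons(5)
fireworks = pool_share_gallons(350_000)
sand = pool_share_gallons(10_000_000)

print(f"Heart disease: {heart:,.0f} gallons, about {heart / TANKER_GALLONS:.1f} tankers")
print(f"Fireworks: {fireworks:.2f} gallons")
print(f"Sand hole: {sand * TBSP_PER_GALLON:.1f} tablespoons, "
      f"or {sand * FL_OZ_PER_GALLON:.2f} fluid ounces")
```

Under these assumptions the sand share works out to roughly 17 tablespoons, a bit under eight and a half fluid ounces.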

I can’t control the rapid rise in risk disclosure, or the ubiquitous access to all of it. It’s probably not going to stop, and it’s unlikely that, in general, it will become more analytical. But now I know I can offset the cumulative effect it has on me, at least a little bit. I have that salve now. When dread creeps in, I can calm myself down. Maybe I’m still imaging the numerator — I can picture drinking that glass of water. It’s not impossibly small. But when I think about filling that pool, it does seem insignificant. I’ve made some progress understanding this impossibly remote risk. I can go to the beach without scanning for holes. I can let my kids play with their pails and shovels knowing they’ll be okay. Knowing that drowning in sand is, almost literally, nothing to worry about.